NeuralScope Benchmark

Since 2017, mobile devices have gained AI capabilities by adopting advanced deep learning models and powerful chips. The Huawei Kirin 970, Apple A11 Bionic, and MediaTek P60 provide dedicated AI processors that deliver high performance and power efficiency on mobile phones. Phone vendors have also developed AI applications that take advantage of these processors. The NeuralScope app is a deep learning benchmarking tool for Android smartphones that lets you check the deep learning computing performance of your device.

The NeuralScope Benchmark covers three common computer vision deep learning categories: classification, detection, and segmentation.

Classification - Deep learning based neural networks recognize the object classes in one or more input photos. They can recognize 1000 different object classes. The benchmarking app includes four models: MobileNet-V1, MobileNet-V2, ResNet-50, and Inception-V3. For each network, we provide one float model and one quantized model.
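To illustrate the difference between a float model and a quantized model, here is a minimal Python sketch of 8-bit affine quantization. It assumes a simple scale and zero-point scheme; the helper names `quantize` and `dequantize` and the sample weights are hypothetical, and the scheme used by the models inside the app may differ.

```python
def quantize(values, num_bits=8):
    """Map floats to unsigned integers with an affine (scale, zero-point) scheme."""
    qmin, qmax = 0, 2 ** num_bits - 1
    # The represented range must include 0.0 so that zero maps to an exact integer.
    lo, hi = min(min(values), 0.0), max(max(values), 0.0)
    scale = (hi - lo) / (qmax - qmin) or 1.0  # fall back to 1.0 for all-zero input
    zero_point = round(qmin - lo / scale)
    # Round each value to the nearest step and clamp it into the integer range.
    q = [max(qmin, min(qmax, round(v / scale) + zero_point)) for v in values]
    return q, scale, zero_point


def dequantize(q, scale, zero_point):
    """Recover approximate floats from the quantized integers."""
    return [scale * (x - zero_point) for x in q]


# Hypothetical float weights, for illustration only.
weights = [-1.0, -0.25, 0.0, 0.5, 1.0]
q, scale, zero_point = quantize(weights)
restored = dequantize(q, scale, zero_point)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, max_error)
```

The quantized values fit in one byte each instead of four, at the cost of a small rounding error, which is why a quantized model typically trades a little accuracy for speed and size.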

Detection - Now you can perform object counting on your phone. The model combines MobileNet, a lightweight classification model, with the Single Shot MultiBox Detector (SSD), an object detector that does not resample pixels or feature maps for bounding box hypotheses. It can detect 80 different object classes, and this design improves speed for high-accuracy detection.
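As a rough idea of how object counting can follow from detection, the Python sketch below tallies detections per class above a confidence threshold. The detection format, the `count_objects` helper, and the 0.5 threshold are assumptions made for illustration, not the app's actual output; it also assumes overlapping boxes have already been filtered out.

```python
from collections import Counter


def count_objects(detections, score_threshold=0.5):
    """Count detections per class label, ignoring low-confidence boxes."""
    return Counter(
        det["label"] for det in detections if det["score"] >= score_threshold
    )


# Hypothetical detector output: one dict per predicted bounding box.
detections = [
    {"label": "person", "score": 0.91, "box": (12, 30, 140, 260)},
    {"label": "person", "score": 0.78, "box": (150, 28, 270, 255)},
    {"label": "dog", "score": 0.66, "box": (60, 180, 130, 250)},
    {"label": "dog", "score": 0.21, "box": (300, 200, 340, 240)},
]
counts = count_objects(detections)
print(dict(counts))
```

Raising the threshold reduces false counts from weak detections, while lowering it catches more objects at the risk of counting spurious boxes.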

Segmentation - This task evaluates whether your phone can perform automatic background removal for scene replacement. The model can recognize 20 different object classes and segments each recognized object in a different color. It is a ResNet-50 with atrous convolution layers embedded.

The NeuralScope Benchmark is a useful tool for checking whether your devices support deep learning computing.

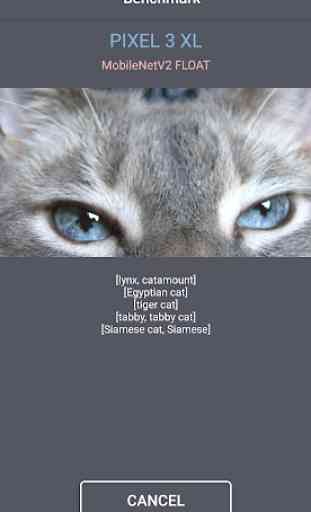

Category : Tools

Looks nice, but to be really useful it should show some metrics like milliseconds per image or frames per second. Never mind, I found that the custom selection menu shows inference time in milliseconds. Great!